Watching Apache Website Logs in Real Time with psBASIC

Watching hits on sites

Keeping an eye on things

A *NIX server administrator has a lot of tools available for processing text based log files. But often times they don't work quite as well as we'd like in real-time. Its easy enough to grab slice and grep for specific bits of data. And there are some tools like "goaccess" that can provide phenomenal real-time output of specific sets of stats. All of these tools have their place and are very helpful. But there are times where you just simply need to see something other than what comes pre-canned.

Parsing Apache logs has always been somewhat problematic. I've written dozens of tools over the years to deal with them. At their core they are simple space separated value files, with some fields enclosed in quotes. Some fields are not. Blank fields are filled with "-". Usually quotes within a field are escaped with a backslash (\). Ordinarily AWK would be the tool I'd reach for when trying to extract data from text files. To me it's a kind of "SQL" for text tables. But its never really grown up and handled the optional enclosing characters. There are ways around these limitations that all suck to some degree, which is why I've written sooo many tools over the years.

NOTE: Web servers other than Apache can and do put out similar content.

psBASIC has now become my preferred tool for creating ad-hoc views of this data or even as a pre-filter for AWK. Nothing beats the conciseness of the AWK language for these kinds of tasks. psBASIC works well in a controller, filter or a terminal dashboard role to help get to the information one might want.

The DELIMIT command of the professional edition (requires purchase) makes breaking the fields of the log lines apart quite simple

DELIMIT #1, " ""\"

psBASIC uses the "" syntax, instead of the \" syntax to escape quotes. So that string is three characters: space, quote and backslash. This defines an ASCII data file separated by space (character 1) with fields optionally enclosed in quotes (character 2) and the quotes are escaped with a backslash (character 3). One could argue this still leaves the timestamp field, which is traditionally enclosed in square brackets, un-handled. However I find that acceptable since I would usually split the time zone out anyways. And this solution still handles the bulk of the splitting work for us.

My Apache and NginX logs use this field arrangement:

- Client address

- Site name (for multiple vhost serving)

- Authenticated user's name (almost always blank, well ... "-")

- Timestamp with "[" prefix

- Timezone with "]" suffix

- Request

- Response code

- Response size

- Referring URL (referrer)

- User Agent (UA) string

- Seconds processing (custom to me)

- Session ID (custom to me, "usertrack" module)

Other than the last two fields I think this used to be the standard multi-hosting format waaaay back when. It seems to me the default has changed but I've been configuring my servers this way for 20 or so years. I have a lot of code and configurations geared to this field arrangement. So I stick with it.

A simple log read example

DELIMIT #1," ""\" : 'Apache space separated with C style escape

OPEN "i",#1,"/var/log/apache2/access.log"

DO UNTIL eof(1)

INPUT #1,ip$,site$,auth$,tm$,zn$,req$,code%,sz%,refer$,ua$,dur%,ses$

'...

LOOP

CLOSE #1

That's it!

In this case I could have used the MAT commands to read the fields into an array, which is more forgiving about broken data. But in this case I wanted to access fields by name and have the integer values converted into integer variables without more commands.

Using an array would look like

DIM log$(12) : '12 fields in the log lines

DELIMIT #1," ""\" : 'Apache space separated with C style escape

OPEN "i",#1,"/var/log/apache2/access.log"

DO UNTIL eof(1)

MAT INPUT #1,log$ : 'read log line and split fields into elements of log$()

'...

LOOP

CLOSE #1

This will read up to 12 space separated values into the log$ array starting at element 1. The numbers from the list above would be the matching array index for each field. But then you'd have to convert string numbers to numeric values to do math or perform decimal comparisons on them. Still, this can be a very handy way to do things too!

What if the log file is compressed?

DELIMIT #1," ""\" : 'Apache space separated with C style escape

OPEN "i",#1,"|zcat /var/log/apache2/access.log-1.gz"

DO UNTIL eof(1)

INPUT #1,ip$,site$,auth$,tm$,zn$,req$,code%,sz%,refer$,ua$,dur%,ses$

'...

LOOP

CLOSE #1

This uses the "pipe open" feature of psBASIC which uses a syntax similar to TCL to perform an equivalent operation as the C and Python popen() functions. This will open the zcat command for reading and tell zcat to open our compressed log file. Because zcat operates like its namesake cat, we could actually have it decompress multiple logs allowing us to pass from one to the other without our program needing to be concerned about switching files. Our data processing logic works the same whether the log is compressed or not.

What if the log is live on another server?

DELIMIT #1," ""\" : 'Apache space separated with C style escape

OPEN "i",#1,"|ssh webserver tail -f /var/log/apache2/access.log"

DO UNTIL eof(1)

INPUT #1,ip$,site$,auth$,tm$,zn$,req$,code%,sz%,refer$,ua$,dur%,ses$

'...

LOOP

CLOSE #1

Once again our data processing logic does not need to know the log is on another machine. You will have to have SSH keyed logins setup for the other machine. The user account that you're using to login needs access to the log files. Pretty standard stuff.

Varying the tail command passed to the remote machine will vary the amount of history you're processing. tail -n +1 -f will always start from the beginning of the log and continue indefinitely. tail -n 0 -f will always handle from now on, without any history. Obviously you can also pass a zcat command in the ssh command to decompress older logs.

From any of those examples its just a matter of catching the data events you want and spitting the desired stats out.

Example of counting hits per host

DEFINT a-z

DIM sites$(20),hits%(20)

site_ct%=0

DELIMIT #1," ""\" : 'Apache space separated with C style escape

OPEN "i",#1,"|ssh -C webserver tail -n +1 -f /var/log/apache2/access.log"

10 DO UNTIL eof(1) : ' Should never happen

INPUT #1,ip$,site$,auth$,tm$,zn$,req$,code%,sz%,refer$,ua$,dur%,ses$

GOSUB 1000

IF time>t THEN GOSUB 2000 : t=time+300

LOOP

CLOSE #1

END

'*** add hits ***

1000 FOR s=0 TO site_ct%-1

IF sites$(s)=site$ THEN INC hits%(s) : RETURN

NEXT

sites$(s)=site$

hits%(s)=1

INC site_ct%

RETURN

'*** display ***

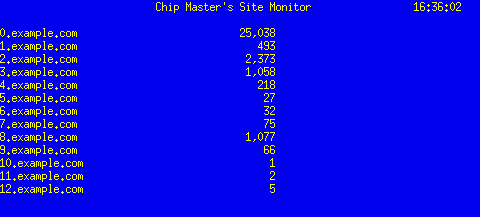

2000 CLS

LOCATE 1,27 : PRINT "Chip Master's Site Monitor";

LOCATE 1,70 : PRINT time$;

PRINT

PRINT

FOR x=0 TO site_ct%-1

PRINT sites$(x);TAB(40);dec$(hits%(x),"###,###")

NEXT

RETURN

A few notes about the code:

- The

ssh -Ctells SSH to compress the channel, making for faster data delivery. - The

IF time>t THEN ... : t=time+300is a KISS way to keep the display updates to no more than once every 3secs, leaving more time for analyzing data. It takes time to write to the screen. It also eliminates screen strobing from my simplistic screen write. - The psBASIC

timefunction returns the current UNIX timestamp in hundredths of seconds. This means that 300 is 3 seconds. - The subroutine at line 1000 implements a crude data dictionary. You'll have to change the

DIMcommand at top if you expect more than 20 sites. But then you'd need a larger terminal too. tailwon't follow log rotation. So you need to restart the program after log rotations. This could be automated withON ... TIMER. If it stalls for too long close and re-open. Or you could use thetimeortime$functions to close and re-open at the expected log rotation time.

I use code similar to this to monitor hits on key URLs on sites that I'm concerned about. If numbers start jumping up too quickly I know I need to block someone trying to break the site. And if the situation warrants I could also detect heavy traffic and play an alarm sound. With the appropriate hardware... strobe lights?

Get your copy of Pi Shack BASIC here!