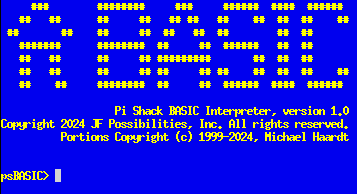

Pi Shack BASIC Helps with the Too Many Files Problem

Pi Shack BASIC is a Ready Tool in Times of Need

If you've been a Linux administrator for any significant amount of time, you have probably run into the "too many files" problem. You try to do something with a large list of files and the shell squawks about "too many arguments" (the actual message is "Argument list too long"). This happens because the kernel limits the total size of the argument list, plus the environment, that can be passed to a program. With a large enough set of filenames you can easily exceed that limit. psBASIC can be a significant help in this situation.
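To see where that ceiling sits on a given box, here is a minimal Python sketch that asks for it with `os.sysconf("SC_ARG_MAX")`, the same value `getconf ARG_MAX` reports. The budget is in bytes and covers the argument strings plus the environment strings, which is why the sketch also estimates how much of it your environment already eats. Both helper names are made up for illustration.

```python
import os

def arg_max_bytes() -> int:
    """Return ARG_MAX: the byte budget for argv plus the environment."""
    return os.sysconf("SC_ARG_MAX")

def environment_bytes() -> int:
    """Approximate bytes the current environment already uses:
    each "KEY=value" string plus its terminating NUL."""
    return sum(len(k) + len(v) + 2 for k, v in os.environb.items())

if __name__ == "__main__":
    print("ARG_MAX:", arg_max_bytes())
    print("environment uses about", environment_bytes(), "bytes of it")
```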

There are many symptoms that can occur with a large number of files. Linux is actually pretty darn good in this situation. If you read older UNIX writings, you'll find it was a known issue that a lot of files in one folder usually performed poorly (read: slowly). Now most common file systems use either hashes or B-trees to speed up finding those needles in the haystack.

Still, large file counts can lead to problems. Given a large enough directory, ls can appear to hang as it attempts to load all the names into RAM and sort them. This can be exacerbated if you're in a crisis situation and short on RAM.

I've had to ^C ls after waiting several minutes to see what was in a directory. ls -f, which skips the sorting, can help with that if the file count isn't too large (hundreds of thousands).
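The reason ls -f helps is that it doesn't sort, so nothing has to wait for the full list. A minimal Python sketch of the same "just show me some names" idea, assuming `os.scandir`, which yields entries in raw directory order as it reads them; `itertools.islice` stops after the first few, so the rest of the directory is never read. `peek` is a hypothetical helper name.

```python
import itertools
import os

def peek(path: str, count: int = 20) -> list[str]:
    """Return up to `count` names in raw directory order (unsorted),
    without reading the rest of the directory."""
    with os.scandir(path) as entries:
        return [e.name for e in itertools.islice(entries, count)]

if __name__ == "__main__":
    for name in peek("."):
        print(name)
```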

Shell wildcard expansion does pretty much the same thing (it reads all the matching names and sorts them), so you have a similar problem, albeit without the added overhead of calling stat(2). Then, when you attempt to pass that list to a program, each name is turned into an argument and *BAM*, you hit the limit error.
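A rough way to see how close a wildcard expansion gets to the limit is to add up what each name costs in argv: its bytes, a terminating NUL and one pointer. The Python sketch below does that for the names `glob.glob` returns (which, like the shell, builds the complete list in memory first). The 8-byte pointer assumes a 64-bit system, the check ignores the environment, and `argv_bytes` / `expansion_fits` are hypothetical helpers, not standard functions.

```python
import glob
import os

POINTER_SIZE = 8  # assumption: 64-bit system

def argv_bytes(names: list[str]) -> int:
    """Estimate argv space: encoded bytes + NUL + one pointer per name."""
    return sum(len(os.fsencode(n)) + 1 + POINTER_SIZE for n in names)

def expansion_fits(pattern: str, command: str = "rm") -> bool:
    """Would `command <pattern>` likely fit under ARG_MAX?
    Ignores the environment, which also counts against the limit."""
    names = [command] + sorted(glob.glob(pattern))  # shell-like sorted list
    return argv_bytes(names) < os.sysconf("SC_ARG_MAX")
```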

There are always many ways to work around these sorts of things, and you will certainly find many useful tips on other blogs across the iNet, each with their own pros, cons and risks. In some cases I've needed to write my own tools to gain access to the list of files that needed to be dealt with. A good system operator will certainly have a few recipes lying around in their brain for such a time. psBASIC makes a handy survival-mode "Swiss Army knife".
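One of the classic recipes is what xargs does: split the list into batches that each fit under the limit and run the command once per batch. Here is a minimal Python sketch of that idea. The budget of half of ARG_MAX is an arbitrary, conservative assumption to leave room for the environment, the 8-byte pointer assumes a 64-bit system, and `batches` / `run_in_batches` are hypothetical names.

```python
import os
import subprocess
from typing import Iterable, Iterator

POINTER_SIZE = 8  # assumption: 64-bit system

def batches(names: Iterable[str], budget: int) -> Iterator[list[str]]:
    """Group names so each group's estimated argv size stays within budget.
    A single name larger than the budget still gets its own batch."""
    batch: list[str] = []
    used = 0
    for name in names:
        cost = len(os.fsencode(name)) + 1 + POINTER_SIZE
        if batch and used + cost > budget:
            yield batch
            batch, used = [], 0
        batch.append(name)
        used += cost
    if batch:
        yield batch

def run_in_batches(command: list[str], names: Iterable[str]) -> None:
    """Run `command` once per batch of names, roughly like xargs."""
    budget = os.sysconf("SC_ARG_MAX") // 2  # assumption: leave half for env
    base = sum(len(os.fsencode(c)) + 1 + POINTER_SIZE for c in command)
    for batch in batches(names, budget - base):
        subprocess.run(command + batch, check=True)
```

Because `batches` consumes any iterable, feeding it a generator such as `(e.path for e in os.scandir(d))` avoids building the full list of names first.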

Overloaded directory of disposable files

One of the first times I ran into this issue, I had 3,000,000+ PHP session files that had stacked up in a directory. rm * wouldn't work. Too many arguments! And I needed to keep the directory in place so new live sessions could still be created. psBASIC can handle this situation quite easily:

```basic
DO
    f$=find$("*",2)       : 'index 2 = 3rd entry, just past "." and ".."
    IF f$="" THEN EXIT DO : 'no more matches: all done
    DELETE f$
LOOP
```

Notes:

- find$() looks for the nth occurrence (counting from 0) of a matching file and does not hide hidden files.

- I look for the 3rd file (2) because in all the Linux filesystems I currently use, the first (0) and second (1) entries are always "." and ".." respectively. You can't delete those.

- I never look beyond the 3rd file because I just deleted it! The next time through, the 3rd file will be something else.

- find$() returns an empty string when there are no more matches.

- find$() does not load and cache file names, unlike, for example, Python's os.listdir(), which builds the complete list up front. This can be an advantage and a disadvantage. In this scenario it's definitely an advantage, since I'm not loading the whole list into RAM. I'll start relieving the stress on the system right away!
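For comparison, roughly the same survival move in Python, assuming you reach for `os.scandir` rather than `os.listdir`: scandir iterates over entries as it reads them instead of building the whole list first. Unlike find$(), it never returns "." and "..", so there is nothing to skip. The sketch deletes every non-directory entry in a streaming pass and, like the psBASIC loop, keeps going until a pass finds nothing. `drain` is a hypothetical helper name.

```python
import os

def drain(path: str) -> int:
    """Delete every non-directory entry in `path`, streaming the directory.
    Repeats passes until one removes nothing. Returns the total removed."""
    removed = 0
    while True:
        removed_this_pass = 0
        with os.scandir(path) as entries:
            for entry in entries:
                if entry.is_dir(follow_symlinks=False):
                    continue  # leave subdirectories alone
                try:
                    os.unlink(entry.path)
                except FileNotFoundError:
                    continue  # already gone, e.g. removed by another process
                removed_this_pass += 1
        removed += removed_this_pass
        if removed_this_pass == 0:  # a pass found nothing: done
            return removed
```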

What about selective removal?

This past Friday I ran into a situation where a user's inbox got flooded with a ton of copies of the same email. Ton = ~200,000!  When their email reader was gagging on it and Dovecot IMAP was griping it needed more RAM than the set limit, something needed to be done!

It was obvious I needed to flush the inbox, but I also wanted to review the emails to see what on earth caused the tsunami. So I chose to scan all the email headers and, if they were from the offending party, move them to a quarantine folder for further review. Obviously there were going to be too many files in the new folder too. But I can manipulate them easily enough in psBASIC, so no biggie!

```basic
quarantine$="/some/directory/" : 'move emails to here
bad_header$="From: joe@no.mo.spam.com" : 'if they have this header
ct=0
DO
    x=2 : cct=0
    DO : 'the main directory walk
        f$=find$("*",x)
        IF f$="" THEN EXIT DO : 'all done this pass
        OPEN "i",#2,f$
        DO UNTIL eof(2) : 'read & check the email headers
            LINE INPUT #2,l$
            IF l$="" THEN EXIT DO : 'end of headers. NEXT!
            ' Test for the offending header.
            IF l$=bad_header$ THEN
                ' WE HAVE A WINNER!!!
                RENAME f$ TO quarantine$+f$
                INC ct,cct : 'bump the total and this-pass counters
                DEC x : 'keep checking the same index until it's not a match
                PRINT ct;chr$(13); : 'progress count, CR keeps it on one line
                EXIT DO
            END IF
        LOOP
        CLOSE #2
        INC x
    LOOP
LOOP WHILE cct
PRINT
PRINT ct;"of";x
```

The operation of this program may seem a bit cryptic due to its optimization. Basically it operates like this:

- The outermost loop runs until a pass finds no more matches (cct=0).

- The second loop walks down the directory looking for matches.

- The third loop opens the email file and checks the headers for one that matches.

- If the header isn't found and we hit the end of the headers (denoted by an empty line, per RFC 822), we break out of the loop, close the file and move on to the next.

- If the header is found, then use RENAME to move the file to the quarantine, close the file and recheck the current nth file. This is accomplished with the DEC x that runs prior to the INC x.
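The same scan-the-headers-and-quarantine idea, sketched in Python for comparison. Assumptions: one message per file (as in a Maildir folder), an exact match on the whole header line as in the psBASIC program, a quarantine directory on the same file system (os.rename, like rename(2), doesn't cross file systems), and the hypothetical names `header_hit` and `quarantine`. Each file is read only up to the blank line that ends the headers, and passes repeat until one moves nothing.

```python
import os

def header_hit(path: str, bad_header: bytes) -> bool:
    """True if a header line exactly equals bad_header.
    Stops at the blank line that separates headers from the body."""
    with open(path, "rb") as fh:
        for line in fh:
            line = line.rstrip(b"\r\n")
            if line == b"":  # end of headers: NEXT!
                return False
            if line == bad_header:
                return True
    return False

def quarantine(inbox: str, dest: str, bad_header: bytes) -> int:
    """Move matching messages from inbox to dest; return how many moved."""
    moved = 0
    while True:
        moved_this_pass = 0
        with os.scandir(inbox) as entries:
            for entry in entries:
                if entry.is_file(follow_symlinks=False) and header_hit(entry.path, bad_header):
                    # file is already closed here; move it out of the inbox
                    os.rename(entry.path, os.path.join(dest, entry.name))
                    moved_this_pass += 1
        moved += moved_this_pass
        if moved_this_pass == 0:  # a full pass with no matches: done
            return moved
```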

The driving factors for this design:

- As I mentioned above, find$() does not cache or load directory entries ahead of time. So find$("*",100000) has to read 100,000 or more entries from the directory, if there are that many. So the more work we can do while keeping that second number low, the faster our code runs, especially if the disk is flailing.

- When moving the files out of the directory they naturally disappear. Let's say find$("*",2) found a file we need to get rid of. Then re-running find$("*",2) will provide the next possible target. Staying in the low numbers is faster.

- Because files are being removed (see the previous point), a straight pass through the directory (x going from 2 to however many) will skip some files. So we use the outermost loop to check again until no more are found.
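To make the cost argument concrete: if every lookup of the nth match starts reading from the top of the directory, fetching index n reads at least n+1 entries. The Python sketch below is a hypothetical stand-in for find$() built on `os.scandir` and `fnmatch`, not psBASIC's actual implementation, and it reports how many entries each lookup touched. Unlike find$(), it doesn't see "." and "..", so its index 0 is a real file.

```python
import fnmatch
import os

def find_nth(path: str, pattern: str, n: int) -> tuple[str, int]:
    """Return (name of the nth match counting from 0, or "" if none,
    number of directory entries read to get there)."""
    seen = 0
    read = 0
    with os.scandir(path) as entries:
        for entry in entries:
            read += 1
            if fnmatch.fnmatch(entry.name, pattern):
                if seen == n:
                    return entry.name, read
                seen += 1
    return "", read
```

Calling it for n = 0, 1, ..., N-1 on a directory of N matching files reads 1 + 2 + ... + N = N(N+1)/2 entries in total, which is why parking on a low index after each removal pays off.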

In this situation I knew the super-vast-majority of the files needed to go. In the end only about seventy out of nearly 200,000 remained. If I had known a large portion of them would remain, I'd have written the targets to a list to be processed in a second step. This would prevent skipping files and limit the passes over the directory to one. Because there were so few keepers, as I suspected, this was a really fast pain reliever.
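The two-step alternative can be sketched in Python like this. Assumptions: one message per file, an exact match on the whole header line, Maildir-style filenames with no newlines in them, a list file kept outside the inbox, and the hypothetical names `collect_targets` and `move_targets`. Pass 1 walks the directory exactly once and only appends matching names to the list file, so nothing is skipped and memory use stays flat; pass 2 moves whatever was listed.

```python
import os

def collect_targets(inbox: str, bad_header: bytes, list_path: str) -> int:
    """Pass 1: write the name of every message whose headers contain an
    exact bad_header line to list_path, one per line. Returns the count."""
    count = 0
    with open(list_path, "w", encoding="utf-8", errors="surrogateescape") as out, \
            os.scandir(inbox) as entries:
        for entry in entries:
            if not entry.is_file(follow_symlinks=False):
                continue
            with open(entry.path, "rb") as fh:
                for line in fh:
                    line = line.rstrip(b"\r\n")
                    if line == b"":  # end of headers
                        break
                    if line == bad_header:
                        out.write(entry.name + "\n")
                        count += 1
                        break
    return count

def move_targets(inbox: str, dest: str, list_path: str) -> int:
    """Pass 2: move every listed file from inbox to dest."""
    moved = 0
    with open(list_path, encoding="utf-8", errors="surrogateescape") as names:
        for name in names:
            name = name.rstrip("\n")
            os.rename(os.path.join(inbox, name), os.path.join(dest, name))
            moved += 1
    return moved
```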

NOTE: I can hear "you could grep". There are three main issues with that. The first is the "too many arguments" problem... but yeah, there are ways around that. The second is that grep is unaware of the format of emails and could match on content that is undesirable. This is especially true with forwards and replies that could contain the header in the mail body. Lastly, by limiting the search to just the header fields, which is all I needed, I slurped up far fewer megs, meaning less time to pain relief. You use the tool that works best for the job. This time that was psBASIC.
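The header-versus-grep point can be shown on a single message held in memory. In this Python sketch (hypothetical helpers `header_scan` and `grep_like`), a forwarded message that quotes the bad header in its body fools the whole-message search but not the header-only scan, and the header scan also reports how few bytes it examined before stopping at the blank line.

```python
def header_scan(message: bytes, needle: bytes) -> tuple[bool, int]:
    """Check header lines only. Returns (matched, bytes examined)."""
    examined = 0
    for line in message.splitlines(keepends=True):
        examined += len(line)
        text = line.rstrip(b"\r\n")
        if text == b"":  # blank line: headers are over
            return False, examined
        if text == needle:
            return True, examined
    return False, examined

def grep_like(message: bytes, needle: bytes) -> tuple[bool, int]:
    """Naive whole-message search, the way grep sees the file."""
    return needle in message, len(message)
```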

Get your copy of Pi Shack BASIC here!